With a business name like Light Field Lab, the company is frequently asked: “Just what is a light field, anyway?” Answering this simple question is a conundrum, since the answer is at once an intuitive concept, well understood for at least 500 years, and a complex optical phenomenon that can only be explained with unfamiliar terms like “plenoptic function” and “integral imaging.” To better understand this paradox, let’s start with a look at the miraculous capability of human vision.

How Humans See the World

Everything we see around us is the result of light from a source of illumination, such as the sun, bouncing off various objects. At the surface of each object, some photons are absorbed while others are reflected, often in many different directions, sometimes bouncing off another surface and repeating this process until they finally reach our eyes. Shiny surfaces reflect most of the light falling on them, while dark matte surfaces might absorb most of it. The portion of this rich and complex mix of light rays that reaches us arrives at our eyes from all directions, often after reflecting off multiple surfaces. The resulting “image” is captured by our retina and processed in the brain as part of the complex human visual system.

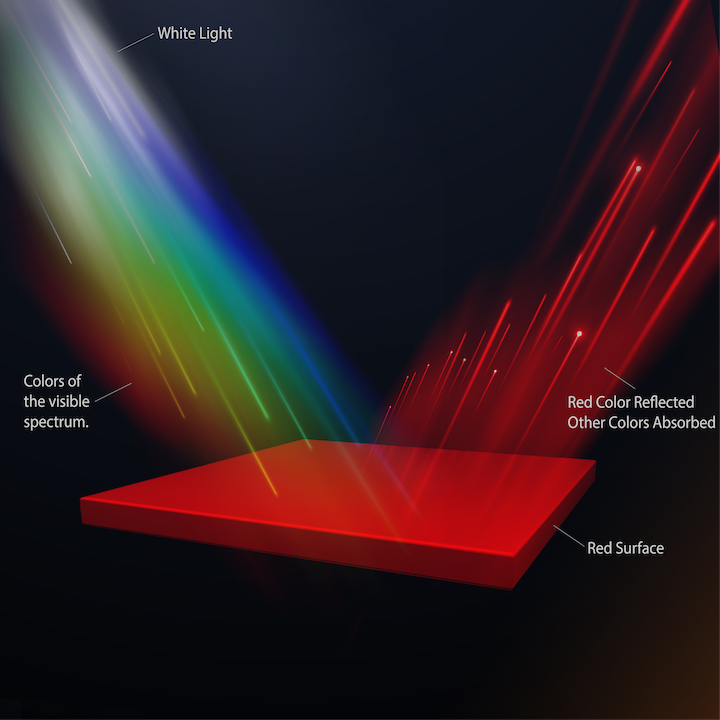

We perceive different colors because light arrives at different wavelengths, which are simply the distance between successive crests (or troughs) of a light wave. While waves of water in an ocean might be 100 feet apart, the wavelengths of visible light range from about 400 nanometers (for violet) to 700 nanometers (for red). A nanometer is one-billionth of a meter, so roughly 100 wavelengths of visible light fit within the width of a human hair. When we view an object, the perceived color is the result of the spectrum of the original light source, as modified by the reflective properties of the object itself. For example, an apple under noontime sunlight appears red because blue and green wavelengths are absorbed at its surface, while red wavelengths, roughly 620 to 700 nanometers, bounce off the apple’s skin to reach our eyes.
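As a quick sanity check on the hair comparison, the arithmetic can be sketched in a few lines of Python. The 50-micrometer hair width is an assumption chosen for illustration; real hairs vary widely, so the count shifts accordingly.

```python
# Rough check of "about 100 wavelengths of visible light per hair width".
# ASSUMPTION: a human hair is taken to be about 50 micrometers wide;
# real hairs vary widely, very roughly from 20 to 180 micrometers.
HAIR_WIDTH_M = 50e-6  # hypothetical representative hair width, in meters
NM = 1e-9             # one nanometer, in meters


def wavelengths_across(width_m: float, wavelength_nm: float) -> float:
    """Number of waves of the given wavelength that fit across width_m."""
    return width_m / (wavelength_nm * NM)


for name, nm in [("violet", 400), ("mid-spectrum", 550), ("red", 700)]:
    count = wavelengths_across(HAIR_WIDTH_M, nm)
    print(f"{name:>12} ({nm} nm): about {count:.0f} wavelengths")
```

Shorter (violet) wavelengths pack more waves into the same width than longer (red) ones, which is why the figure is only "roughly 100."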

Collectively, your visual system perceives that you are looking at some portion of the world around you: perhaps just an apple, but maybe a forest meadow, a busy downtown street or a book. But what you’re actually observing is a light field, consisting of an unfathomable number of light rays reflecting off all the objects around you. What if there were some way to capture these rays of light, without the objects even being there?

A Magic Window

Science fiction authors have teased our imagination with the idea of a machine that could reproduce the environment around us in a manner indistinguishable from reality. The most recognized example is, of course, the Holodeck from “Star Trek”, a fictional device that at first appears to be a normal room but, when enabled, creates a realistic 3D simulation of a real or imaginary setting. But let’s look at something even easier to understand. Imagine sitting in a room and viewing an expansive landscape through a large picture window. Now imagine that this is a “magic” window that can capture every light ray passing through the glass, recording the exact parameters of each one: where it crosses the glass, its direction, its color and its intensity. Finally, imagine our magic window can reproduce these rays later, reconstructing the landscape in perfect fidelity. Because the entire light field was captured, you could walk around the image and see alternate viewpoints, just as in real life, and shift your focus from nearby objects to the horizon. This is the idea behind how light field imaging will soon be used to capture and reproduce reality, without the need for headgear or glasses.
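Why does capturing every ray let you change focus after the fact? One standard illustration from the computational photography literature is shift-and-add refocusing, sketched below in Python. This is a generic textbook technique, not a description of how any particular camera or display works. To keep it short, the sketch assumes a simplified “flatland” light field with a single position coordinate x along the window and a single viewpoint coordinate u; all names are hypothetical.

```python
from typing import List

# views[u][x]: brightness seen from viewpoint u at position x on the "window".
# A hypothetical, simplified 2D ("flatland") light field; a real one has
# two position coordinates and two direction coordinates.
LightField2D = List[List[float]]


def refocus(views: LightField2D, shift_per_view: int) -> List[float]:
    """Shift-and-add refocusing.

    Each view is sampled at an offset proportional to its distance from the
    central view, and the samples are averaged. Points whose disparity
    between neighboring views equals shift_per_view line up and come out
    sharp; points at other depths are spread out (blurred).
    """
    n_views = len(views)
    width = len(views[0])
    center = n_views // 2
    out = [0.0] * width
    for u, view in enumerate(views):
        offset = (u - center) * shift_per_view
        for x in range(width):
            # Clamp to the image border rather than wrapping around.
            src = min(max(x + offset, 0), width - 1)
            out[x] += view[src]
    return [value / n_views for value in out]


def point_light_field(n_views: int, width: int, x0: int, disparity: int) -> LightField2D:
    """Synthetic light field of one bright point that sits at x0 in the
    central view and moves by `disparity` pixels per step in viewpoint."""
    center = n_views // 2
    return [
        [1.0 if x == x0 + (u - center) * disparity else 0.0 for x in range(width)]
        for u in range(n_views)
    ]


views = point_light_field(n_views=3, width=11, x0=5, disparity=2)
print("focused on the point:", refocus(views, shift_per_view=2)[5])
print("focused elsewhere:   ", refocus(views, shift_per_view=0)[5])
```

With a shift matching the point’s disparity, all three views agree and the point keeps its full brightness; with a different shift its light is split across several positions, which is exactly what an out-of-focus point looks like. Choosing the shift is choosing the depth that appears sharp.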

A Brief History

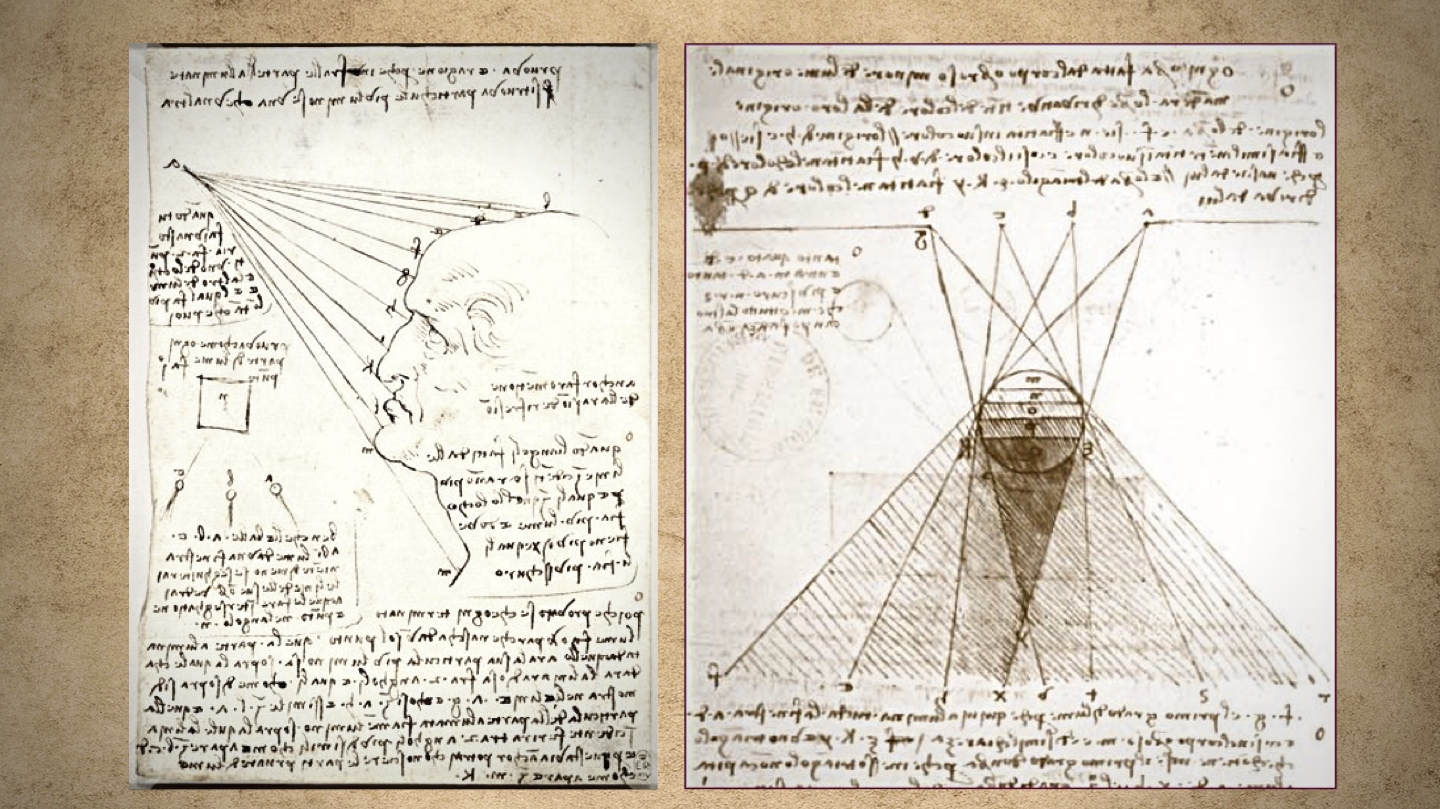

Today, the light field is often thought of as a recent discovery, but this is not at all the case. As in our examples above, the way we see the world has been intuitively understood for much of human history. Back in the 16th century, Leonardo da Vinci described this concept of a light field in his manuscript on painting: “The air is full of an infinite number of radiant pyramids caused by the objects located in it. These pyramids intersect and interweave without interfering with each other. … The semblance of a body is carried by them as a whole into all parts of the air, and each smallest part receives into itself the image that has been caused.” Today, we tend to think of radiance in terms of light rays rather than pyramids, but the concept remains the same.

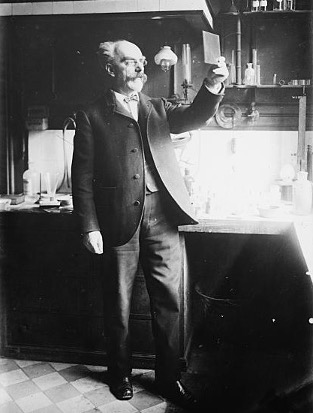

By 1908, research had progressed from representing light fields in oil and watercolor painting to sophisticated photographic techniques. Capturing light fields in 3D photographs was first proposed by Gabriel Lippmann, a pioneering inventor and physicist. In a series of groundbreaking publications, he described what he called Integral Photographs. Lippmann’s 1908 proposal described a multi-lens photographic plate “reminiscent of the insect’s compound eye” that captures light rays from multiple directions, enabling the production of a virtual 3D image of the scene, complete with parallax and perspective shift as the viewer changes position. In 1936, the term “light field” was coined by Andrey Gershun, who published a classic text in which he described the light field as “the radiance traveling in every direction through every point in space.”

Light Fields Become Digitized

Innovation continued over the following decades, including advances in lenses, optical apertures, diffractive optics and more. But by the late 1980s, as computers came to replace traditional photochemical imaging on film, an important question emerged: how do we quantify the complex characteristics of a light field? Or, put another way: what is the set of all things that one can ever see?

An answer was proposed in 1991 by Edward Adelson and James Bergen, who defined what they dubbed the “plenoptic” function, a term derived from the Latin plenus (complete or full) and optic. The plenoptic function describes a ray-based model of light (or a model of radiant pyramids, if you prefer da Vinci’s terminology). It gives the intensity of light as a function of viewpoint (viewing position and direction), of time (motion) and of wavelength (color). The goal would be to create a plenoptic light field camera capable of precisely capturing and digitizing all these parameters. A complementary light field display could then recreate the image, just as our magic window did in the science fiction example.
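Adelson and Bergen wrote the plenoptic function out explicitly as the intensity of light seen from every viewing position, in every direction, at every wavelength and at every moment:

$$
P = P(\theta, \phi, \lambda, t, V_x, V_y, V_z)
$$

Here $(V_x, V_y, V_z)$ is the viewing position, $(\theta, \phi)$ the viewing direction, $\lambda$ the wavelength and $t$ the time: seven dimensions in all, which is why capturing the full function is such a demanding goal.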

Within a few years, the next pivotal milestone was reached when light fields were fully introduced into computer graphics in 1996 by Marc Levoy and Pat Hanrahan at Stanford University. This launched a surge of innovation bridging plenoptic imaging with advances in data processing, including the emergence of the first graphics processing units (GPUs), new CCD and CMOS camera sensors, innovations in digital signal processing and data storage, and more. This combination came to be known as “computational imaging” and remains the cornerstone of camera and sensor technology today. Computational imaging is now a component of everything from the camera in your phone to autonomous cars and modern light field computational microscopes.
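Levoy and Hanrahan made the problem tractable by observing that, in empty space, radiance does not change along a ray, so for a fixed wavelength and moment in time a ray can be labeled with just four numbers: the points where it crosses two parallel planes, commonly written (u, v) and (s, t). The Python sketch below shows that labeling. Placing the planes at z = 0 and z = 1, and the names `Ray` and `two_plane_coords`, are illustrative assumptions rather than anything taken from their paper.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Ray:
    origin: Vec3     # any point on the ray
    direction: Vec3  # direction of travel (need not be normalized)


def two_plane_coords(ray: Ray, z_uv: float = 0.0, z_st: float = 1.0) -> Tuple[float, float, float, float]:
    """Label a ray by where it crosses two parallel planes (two-plane
    parameterization). ASSUMPTION: both planes are perpendicular to the
    z-axis, at z = z_uv and z = z_st."""
    ox, oy, oz = ray.origin
    dx, dy, dz = ray.direction
    if dz == 0:
        raise ValueError("ray is parallel to the planes and never crosses them")

    def crossing(z: float) -> Tuple[float, float]:
        k = (z - oz) / dz  # how far along the ray we must travel to reach plane z
        return ox + k * dx, oy + k * dy

    u, v = crossing(z_uv)
    s, t = crossing(z_st)
    return u, v, s, t


print(two_plane_coords(Ray(origin=(0.0, 0.0, -1.0), direction=(1.0, 2.0, 1.0))))
```

A sampled light field can then be stored as a 4D array indexed by (u, v, s, t), and rendering a new view amounts to computing these four coordinates for each viewing ray and looking up (or interpolating) the stored radiance. Rays parallel to the planes cannot be represented this way, which is why practical systems typically combine several such plane pairs.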

Going Forward

There is a famous quote that reminds us, “it’s hard to make predictions, especially about the future.” This is doubly true for immersive imaging technology. But there are a few things we do know. First, computational imaging technologies are progressing at a rapid pace and will enable amazing new features within the next few years. Second, demand for immersive imaging is growing, fueled by the entertainment industry as well as sectors such as biomedical imaging, autonomous vehicles, geospatial analysis and microscopy. Third, decades of important innovations in light field imaging have been building toward a long-term objective: the faithful reproduction of real-life images.

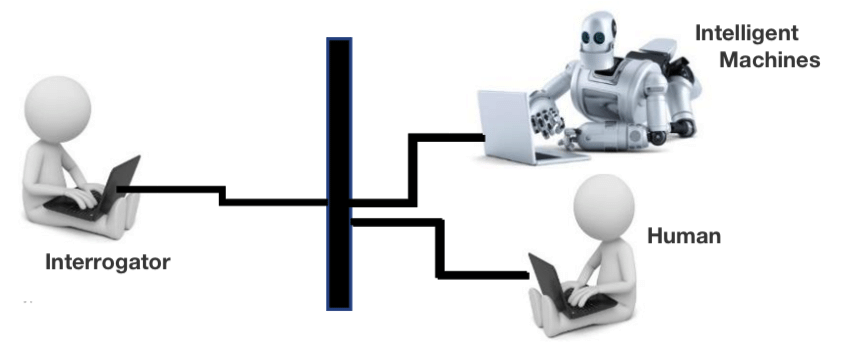

Back in 1950, computing pioneer Alan Turing proposed an influential test for artificial intelligence: a computer can be said to possess artificial intelligence if it can mimic human responses under specific conditions. More recently, Marty Banks, a UC Berkeley professor of optometry, vision science, neuroscience and psychology, teamed up with Gordon Wetzstein, an associate professor of electrical engineering at Stanford University, and their colleagues to propose a new version of the “Turing Test” for 3D and light field displays: “A person views input that comes either from a direct view of the real world or from a simulated view of that world presented on a display. He or she has to decide -- real or display?” Given the astounding progress of recent years, it is now clear that this is our trajectory. The only question is when you will no longer be able to tell the difference between a real landscape and one reproduced on a light field display.