Haptics, defined as the use of tactile sensations as a method of interacting with computers and electronic devices, has been part of our lives since pagers buzzed in our pockets, cell phones vibrated and Nintendo’s Rumble Pak immersed us in gameplay. Today, haptics has become far more sophisticated, having evolved from force-feedback technology to electrostatic and ultrasonic techniques and concepts of brain-computer interaction. It is being built into products in industries as diverse as fitness, medical wearables, entertainment, cars and shopping.

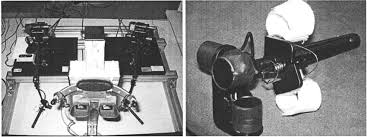

To understand how haptics has developed, you have to go back to 1998, when MIT’s Artificial Intelligence Lab debuted the PHANToM stylus, a force-feedback device that conveyed the shape, texture, temperature, weight and rigidity of a virtual object. “You could deform a box’s shape by poking it with your finger, and actually feel the side of the box give way,” MIT enthused when it announced the technology. “Or you could throw a virtual ball against a virtual wall and feel the impact when you catch the rebound.” The PHANToM, first described in a 1994 paper, was developed by MIT undergraduate Thomas Massie and Dr. J. Kenneth Salisbury, a principal research scientist in MIT’s Department of Mechanical Engineering and the head of haptics research. The inspiration for the device came out of a collaboration between Salisbury and Dr. Mandayam Srinivasan of the Research Laboratory of Electronics.

The PHANToM was the first major landmark in haptics research, says USC Viterbi School of Engineering WiSE Gabilan professor Heather Culbertson, who also directs the Haptics Robotics and Virtual Interaction Laboratory. “Theirs was a seminal paper about providing kinesthetic information to a person with force feedback,” she says. The PHANToM offered six degrees of freedom and three electrical actuators. Culbertson notes that force feedback improved as researchers added more degrees of freedom, used better motors and expanded the workspace.

In 1995, another MIT research paper added textures to the mix; in this case, the team used a force-feedback display to convey the texture of sandpaper. Brigham Young University Associate Professor of Electrical and Computer Engineering Daniel Smalley notes that “it was a significant step to move to tactile interfaces.” It was also an encouraging development for Light Field Lab CTO Brendan Bevensee, whose company is working on projecting holograms. “The interesting thing about haptics is that they can be used in an array to create a volumetric tactile surface,” he says. “We could add another sensory dimension by including a tactile interface, so you could reach out and have the sensation of touching the hologram.”

Haptics can be divided into three areas: force-feedback, wearable/surface and mid-air. Force-feedback devices, which rely on real-world mechanics and physics, were the first haptic devices to be developed. Culbertson specializes in textures for wearable/surface haptics, which let the user feel virtual textures via the surface of a tablet computer or a wearable glove. Whereas physics-based models simulate a texture, she explains, her team works from recorded data. “With data, you record the vibration signals directly, and you don’t have to create a model to generate those signals,” she says. “You’re actually recording data of how wood or carpet feels.” The data behaves “a lot like audio data,” but at much lower frequencies than typical audio waves. Machine learning, neural networks and other types of data modeling are used to create these kinds of haptic textures. This work is still being tested rigorously and is not yet embedded in commercial products. The chief challenge here is the lack of actuators small enough for wearables. “There’s a lot of research in miniaturizing actuators but not many are commercially available,” she says.
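As a rough illustration of what treating texture data “a lot like audio data” can look like, the sketch below fits a very small statistical model to a recorded vibration trace and then generates new vibration samples with similar character. It is only a minimal example under stated assumptions: the second-order autoregressive model, the function names and the stand-in “recording” are all hypothetical choices made for illustration, and nothing here should be read as the method Culbertson’s lab actually uses.

```python
import random

def autocov(x, lag):
    """Biased sample autocovariance of signal x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    return sum((x[i] - mean) * (x[i + lag] - mean) for i in range(n - lag)) / n

def fit_ar2(recording):
    """Fit x[n] = a1*x[n-1] + a2*x[n-2] + noise using the Yule-Walker equations."""
    r0, r1, r2 = (autocov(recording, k) for k in range(3))
    det = r0 * r0 - r1 * r1
    a1 = r1 * (r0 - r2) / det
    a2 = (r0 * r2 - r1 * r1) / det
    # Variance of the driving noise; clamp to guard against rounding error.
    noise_var = max(r0 - a1 * r1 - a2 * r2, 0.0)
    return a1, a2, noise_var

def synthesize(a1, a2, noise_var, n_samples, rng):
    """Generate n_samples of vibration from the fitted model."""
    sigma = noise_var ** 0.5
    out = [0.0, 0.0]  # two zero samples to start the recursion
    for _ in range(n_samples):
        out.append(a1 * out[-1] + a2 * out[-2] + rng.gauss(0.0, sigma))
    return out[2:]

if __name__ == "__main__":
    # Stand-in for a real recording (assumption: no measured data is available here).
    fake_recording = synthesize(1.2, -0.8, 1.0, 5000, random.Random(0))
    a1, a2, noise_var = fit_ar2(fake_recording)
    drive_signal = synthesize(a1, a2, noise_var, 1000, random.Random(1))
    print(f"a1={a1:.3f} a2={a2:.3f} samples={len(drive_signal)}")
```

In a real system the recording would come from a sensor dragged across wood or carpet, and the synthesized samples would be sent to an actuator; both of those steps are outside this sketch.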

Surface haptics can be achieved via electrostatic or ultrasonic technology. Apple just filed a patent application for electrostatic haptic output through a conductive coating on a device, which would be able to target the feedback more narrowly, to a fingertip rather than the whole hand. Ultrasonics, which uses sound waves at frequencies above the upper limit of human hearing, can also be used for mid-air haptics, which refers to touch at a distance, without any actuator touching the skin. “Depending on how the air is modulated, this can give you the feeling of pinpricks or raindrops or a gauzy feeling,” says Smalley. “It’s a range of sensations, and not just on your fingers but other places on your body. It’s relatively low profile and versatile.” Smalley says that ultrasonic haptics, which has emerged in the haptics field over the last five years, has been demonstrated to augment 3D and 2D displays. “You can project an image on a scrim and then, through that, an acoustic wave, so the user can, in a way, feel the objects on the scrim,” he says. “3D objects can be more compelling with a kinesthetic cue.”

Reaching that Holy Grail, virtual objects in mid-air that users can touch and whose textures they can feel, will require more and smaller transducers, says Smalley. “That way, we could more finely address air in the space and more tightly focus energy,” he says. “As we move towards the ultimate holographic display, we can improve on the strategies we’re already taking.”
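One common way an array of ultrasonic transducers concentrates energy at a point in mid-air is phased-array focusing: each element fires with a small delay so that all the waves arrive at the focal point at the same instant. The article does not say which method Smalley’s team uses, so the sketch below is only an illustration under assumptions. The grid size, the 1 cm spacing, the focal point and the function names are hypothetical, and the speed of sound is taken as roughly 343 m/s.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)

def grid_positions(n_per_side, pitch):
    """Centers of an n x n transducer grid in the z = 0 plane, centered on the origin."""
    offset = (n_per_side - 1) * pitch / 2.0
    return [(ix * pitch - offset, iy * pitch - offset, 0.0)
            for ix in range(n_per_side)
            for iy in range(n_per_side)]

def focus_delays(positions, focal_point, c=SPEED_OF_SOUND):
    """Firing delay in seconds for each element so all waves reach focal_point together.

    The farthest element fires first (zero delay); nearer elements wait
    for the difference in travel time.
    """
    distances = [math.dist(p, focal_point) for p in positions]
    farthest = max(distances)
    return [(farthest - d) / c for d in distances]

if __name__ == "__main__":
    elements = grid_positions(4, 0.01)   # 16 elements, 1 cm apart (assumed)
    focus = (0.0, 0.0, 0.2)              # 20 cm above the array center (assumed)
    delays = focus_delays(elements, focus)
    print(f"largest delay: {max(delays) * 1e6:.2f} microseconds")
```

With more and smaller elements, the same calculation is simply applied over a finer grid, which is one way to read Smalley’s point about addressing the air more finely.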

Bevensee points out that some of the earliest use cases for sophisticated haptics are in automotive sensing, for such tasks as parking assistance. Culbertson is examining how textures can be used for virtual shopping, and she reports the automotive industry is also experimenting with mid-air haptics to create virtual knobs. Such haptics can also be used in gaming, where force feedback had its early start, as well as other entertainment, training, medicine and design, among many other fields.

As the technology to produce viable holographic panels continues to advance at Light Field Lab, the techniques for adding convincing mid-air haptics are also maturing. The imagery of the not-so-distant future will be virtual, ultra-realistic and sensory.